Bagging in Machine Learning Explained

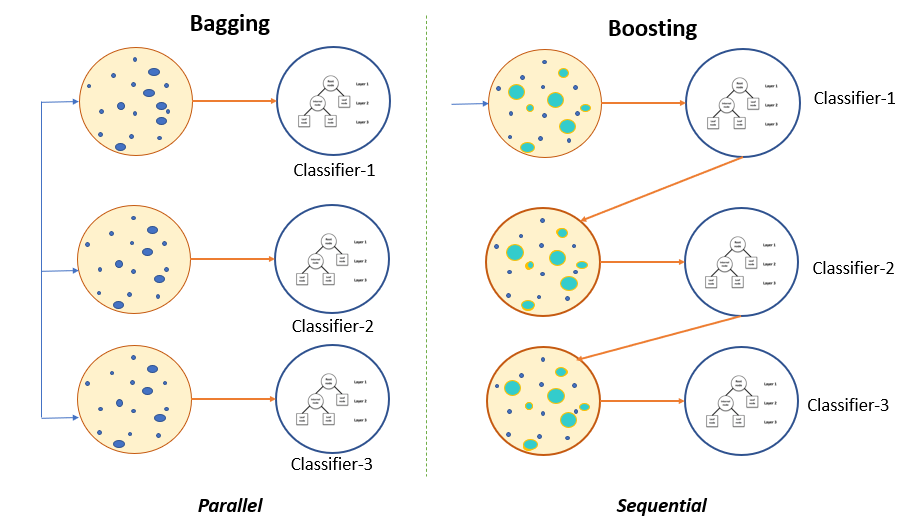

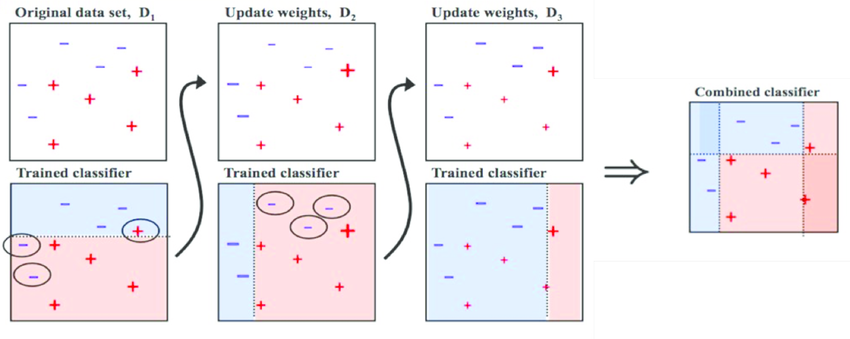

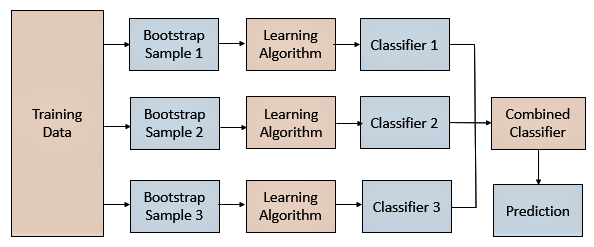

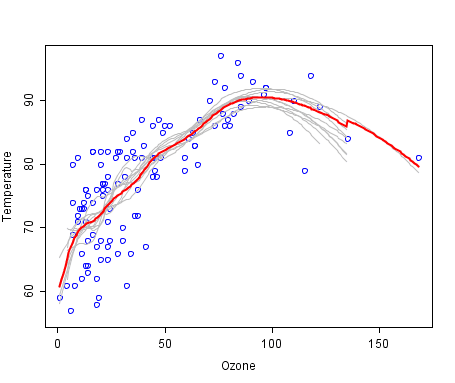

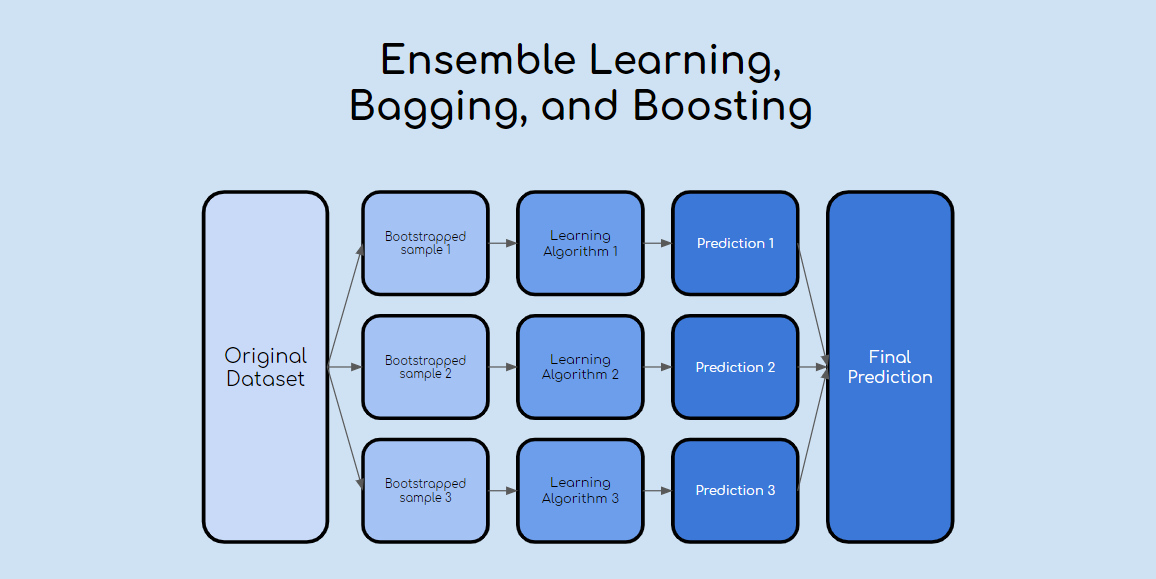

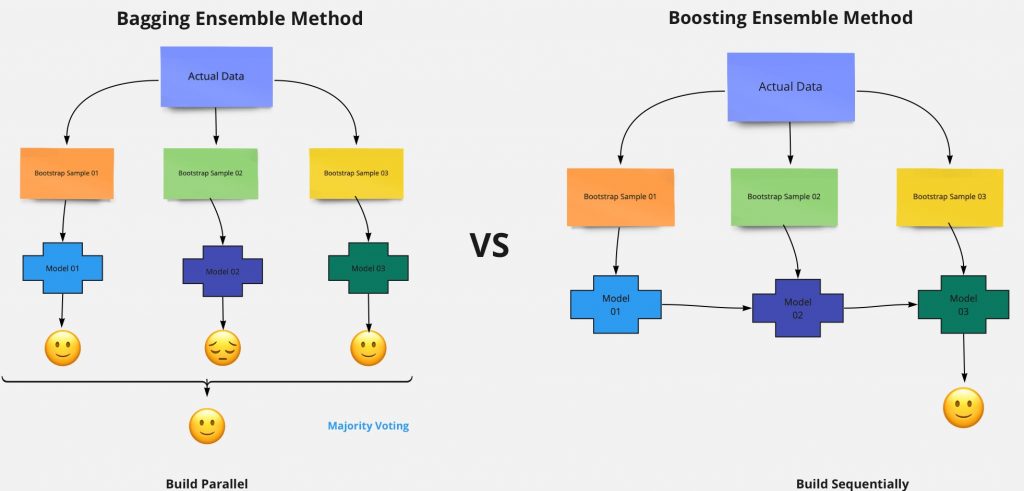

Bagging is the application of the bootstrap procedure to a high-variance machine learning algorithm, typically decision trees. Bagging is a parallel method: it fits the considered base learners independently of one another, so they can be trained at the same time.
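
Because each base learner sees only its own bootstrap sample, the fits do not depend on each other, which is what makes bagging parallel. The Python sketch below illustrates this under stated assumptions: a toy 1-D regression problem (noisy y = 2x), and a 1-nearest-neighbour regressor used as a stand-in for an unpruned decision tree (both simply memorize their training sample, so both are high-variance). The helper names `bootstrap_sample`, `fit_1nn` and `fit_bagged` are hypothetical.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def bootstrap_sample(data, rng):
    """Draw len(data) points from data uniformly *with* replacement."""
    return [rng.choice(data) for _ in range(len(data))]


def fit_1nn(sample):
    """'Training' a 1-nearest-neighbour regressor just memorizes the sample.

    Hypothetical stand-in for a deep decision tree: both are low-bias, high-variance learners.
    """
    points = list(sample)

    def predict(x):
        _, nearest_y = min(points, key=lambda p: abs(p[0] - x))
        return nearest_y

    return predict


def fit_bagged(data, n_models=25, seed=0, max_workers=4):
    # Draw all bootstrap samples up front from one seeded RNG so results are reproducible.
    rng = random.Random(seed)
    samples = [bootstrap_sample(data, rng) for _ in range(n_models)]
    # Each fit uses only its own sample, so the fits are independent and can run concurrently.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        models = list(pool.map(fit_1nn, samples))

    def predict(x):
        # Regression: aggregate by averaging the base predictions.
        return sum(m(x) for m in models) / len(models)

    return predict


if __name__ == "__main__":
    rng = random.Random(42)
    # Noisy samples of y = 2x on [0, 10).
    data = [(x, 2 * x + rng.gauss(0, 1)) for x in (rng.uniform(0, 10) for _ in range(100))]
    model = fit_bagged(data)
    print(round(model(5.0), 2))
```

Threads keep the sketch short; because of CPython's global interpreter lock, CPU-bound training would typically use a process pool instead, with base models that can be pickled.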


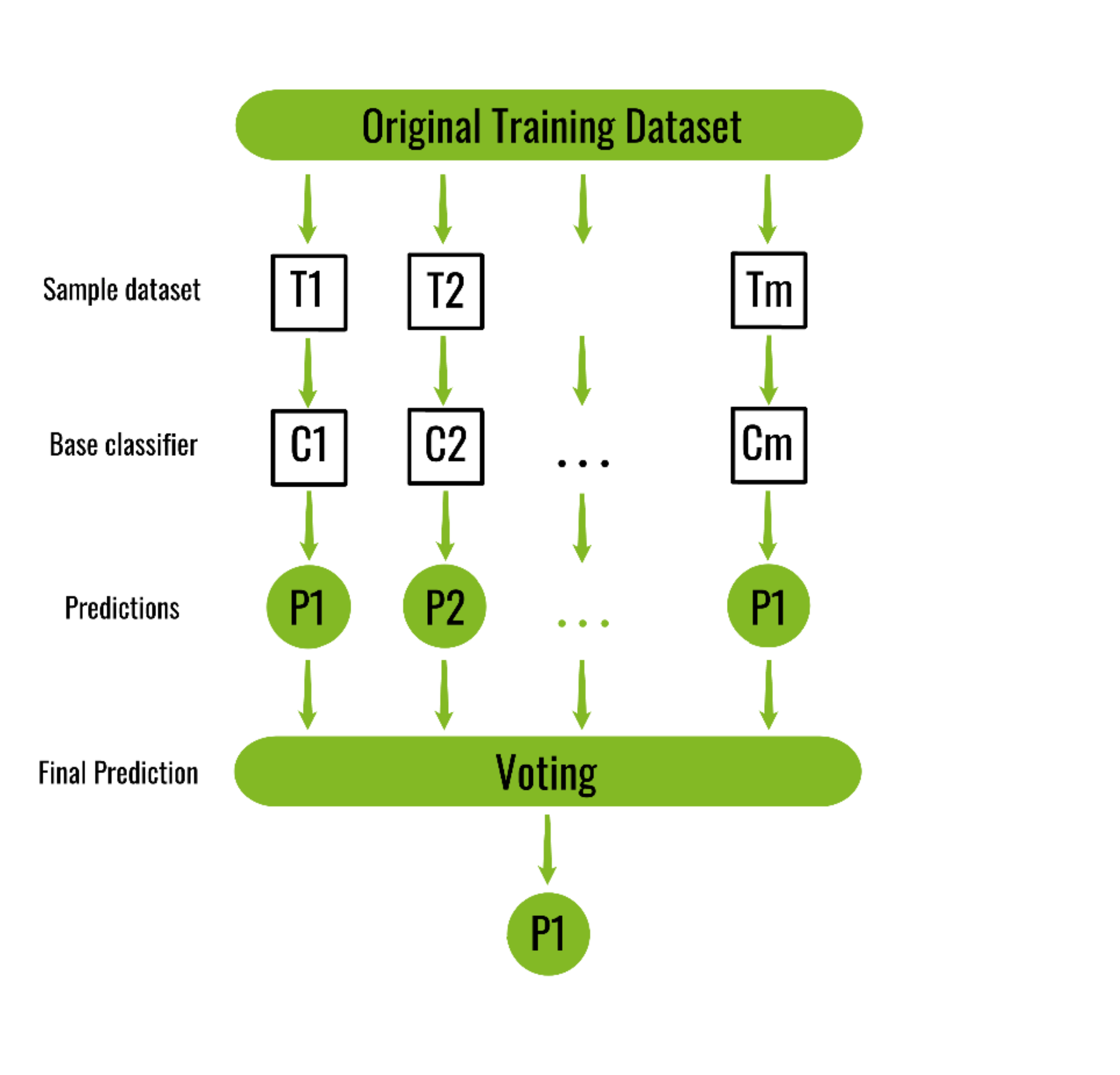

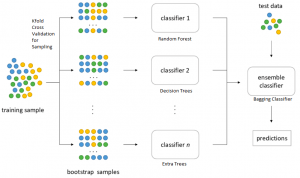

A bagging classifier is an ensemble meta-estimator that fits base classifiers, each on a random subset of the original dataset, and then aggregates their individual predictions (by voting or by averaging) to form a final prediction.
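
A minimal from-scratch sketch of such a meta-estimator in Python, assuming a hypothetical interface in which a base classifier is a function `fit_base(X, y)` that returns a `predict(x)` callable (this is not scikit-learn's API). The random subsets are bootstrap samples of the rows, aggregation is a majority vote, and the 1-nearest-neighbour base learner is only a placeholder for any high-variance classifier such as a deep decision tree.

```python
import random
from collections import Counter


def fit_1nn_classifier(X, y):
    """Hypothetical placeholder base classifier: label of the closest training point."""
    train = list(zip(X, y))

    def predict(x):
        # Squared Euclidean distance between feature tuples.
        _, label = min(train, key=lambda p: sum((a - b) ** 2 for a, b in zip(p[0], x)))
        return label

    return predict


class SimpleBaggingClassifier:
    """Fits n_estimators copies of a base classifier on bootstrap samples, then votes."""

    def __init__(self, fit_base, n_estimators=15, seed=0):
        self.fit_base = fit_base
        self.n_estimators = n_estimators
        self.rng = random.Random(seed)
        self.models = []

    def fit(self, X, y):
        n = len(X)
        self.models = []
        for _ in range(self.n_estimators):
            # Random subset of the original data: n row indices drawn with replacement.
            idx = [self.rng.randrange(n) for _ in range(n)]
            self.models.append(self.fit_base([X[i] for i in idx], [y[i] for i in idx]))
        return self

    def predict_one(self, x):
        # Aggregate the individual predictions by majority vote.
        votes = Counter(m(x) for m in self.models)
        return votes.most_common(1)[0][0]

    def predict(self, X):
        return [self.predict_one(x) for x in X]


if __name__ == "__main__":
    X = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.3), (5.0, 5.1), (5.2, 4.9), (4.8, 5.0)]
    y = ["a", "a", "a", "b", "b", "b"]
    clf = SimpleBaggingClassifier(fit_1nn_classifier).fit(X, y)
    print(clf.predict([(0.2, 0.2), (5.0, 5.0)]))
```

For real projects, scikit-learn provides a ready-made meta-estimator, `sklearn.ensemble.BaggingClassifier`; the sketch above only shows the idea behind it.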

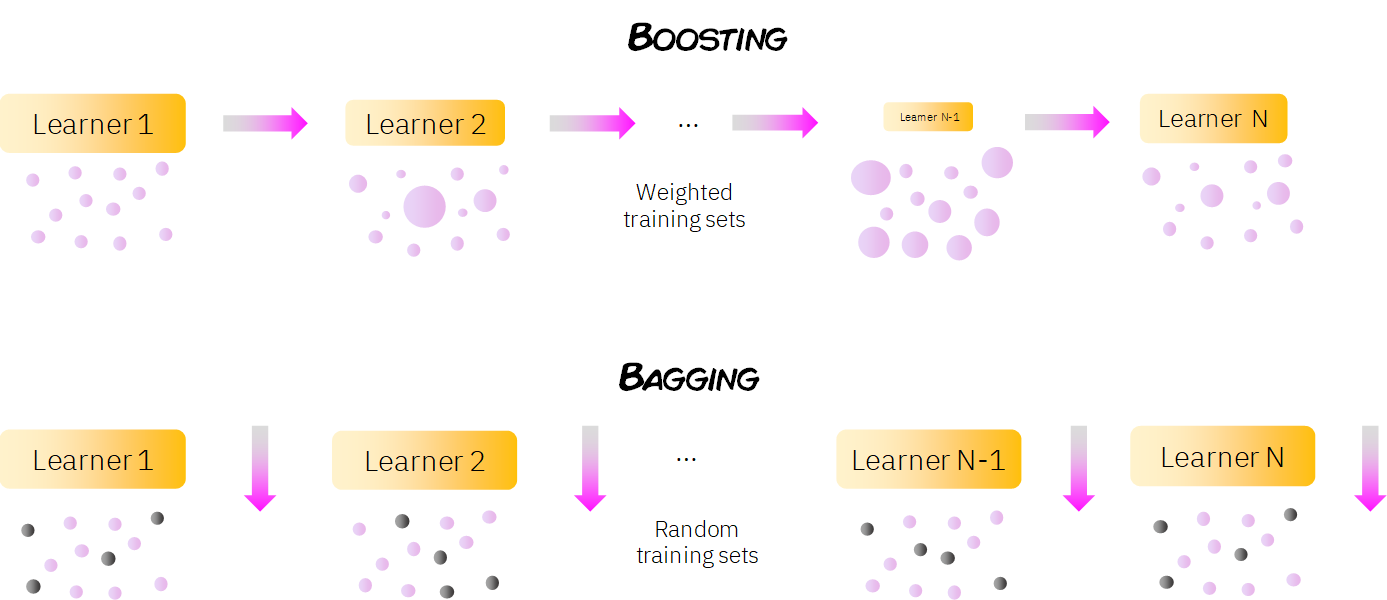

Bagging is a powerful method to improve the performance of simple models and to reduce overfitting in more complex models. The main goal of boosting, by contrast, is to decrease bias, not variance.
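
Why bagging reduces overfitting without targeting bias can be made precise under idealized assumptions: suppose each of the $B$ base predictors $\hat f_b(x)$ has the same mean, variance $\sigma^2$ and pairwise correlation $\rho$. The bagged average then satisfies the standard identities:

$$
\hat f_{\text{bag}}(x) = \frac{1}{B}\sum_{b=1}^{B} \hat f_b(x),\qquad
\mathbb{E}\big[\hat f_{\text{bag}}(x)\big] = \mathbb{E}\big[\hat f_b(x)\big],\qquad
\operatorname{Var}\big[\hat f_{\text{bag}}(x)\big] = \rho\,\sigma^2 + \frac{1-\rho}{B}\,\sigma^2 .
$$

Averaging leaves the bias unchanged and drives the variance down towards $\rho\sigma^2$ as $B$ grows, which is why bagging pays off most for unstable, high-variance learners whose bootstrap fits are not too strongly correlated.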

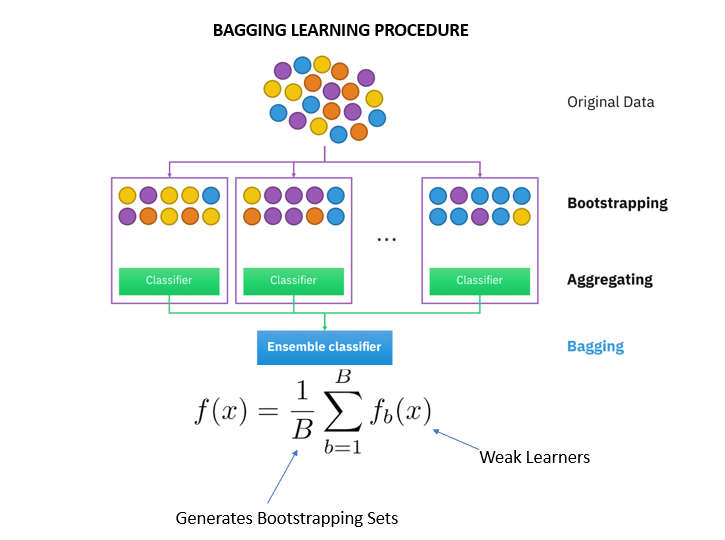

Bootstrap aggregating, also known as bagging, is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms.

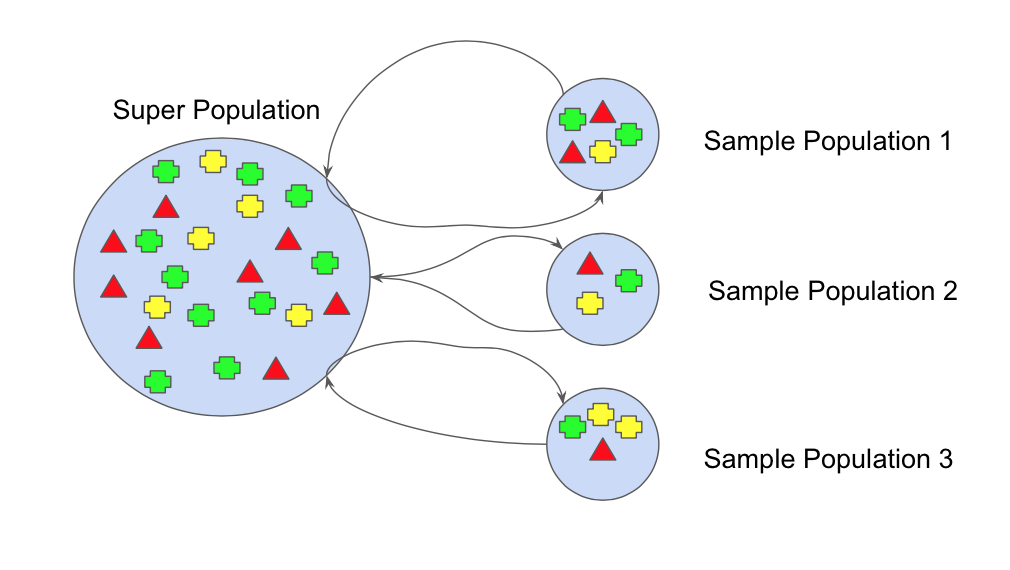

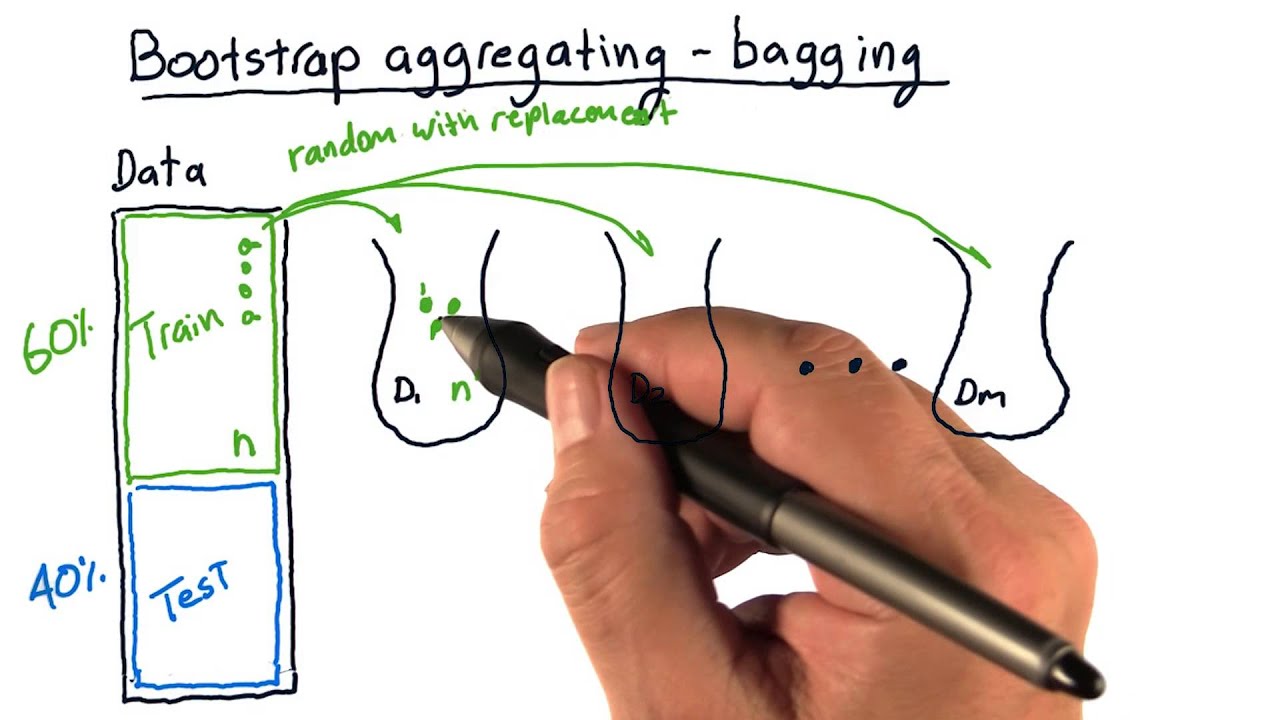

Bagging (short for bootstrap aggregating) is a parallel ensemble method that decreases the variance of the prediction model by generating additional training data from the original dataset through resampling.

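The principle is easy to understand: instead of training a single model on the original dataset, bagging trains several models on resampled versions of it and combines their outputs. The main steps involved in bagging are drawing several bootstrap samples from the original dataset, training one base model on each sample, and aggregating the models' predictions by majority vote (classification) or by averaging (regression).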

Bagging, also known as bootstrap aggregating, is the process in which multiple models of the same learning algorithm are trained on bootstrapped samples of the original dataset. The name is short for Bootstrap AGGregatING, and the technique is used to decrease the variance of the prediction model. There are mainly two types of bagging techniques.
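
The variance reduction can be checked with a small simulation. The Python sketch below rests on illustrative assumptions (noisy data from y = 2x, a 1-nearest-neighbour regressor as the high-variance base learner, 25 bootstrap models; all function names are hypothetical): it draws many independent training sets and compares how much the prediction at the single point x0 = 5 fluctuates for one model versus the bagged ensemble.

```python
import random
import statistics


def true_f(x):
    return 2.0 * x


def make_dataset(rng, n=30, noise=1.0):
    # Fresh noisy training set drawn from y = 2x + N(0, noise^2) on [0, 10).
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, true_f(x) + rng.gauss(0, noise)) for x in xs]


def predict_1nn(sample, x0):
    # High-variance base learner: copy the target of the nearest training point.
    return min(sample, key=lambda p: abs(p[0] - x0))[1]


def predict_bagged(data, x0, rng, n_models=25):
    # Average 1-NN predictions over bootstrap resamples of the same training set.
    preds = []
    for _ in range(n_models):
        boot = [rng.choice(data) for _ in range(len(data))]
        preds.append(predict_1nn(boot, x0))
    return statistics.fmean(preds)


def compare_variance(trials=200, x0=5.0, seed=0):
    rng = random.Random(seed)
    single, bagged = [], []
    for _ in range(trials):
        data = make_dataset(rng)  # a new training set for every trial
        single.append(predict_1nn(data, x0))
        bagged.append(predict_bagged(data, x0, rng))
    return statistics.variance(single), statistics.variance(bagged)


if __name__ == "__main__":
    var_single, var_bagged = compare_variance()
    print(f"variance at x0, single 1-NN: {var_single:.3f}")
    print(f"variance at x0, bagged 1-NN: {var_bagged:.3f}")
```

On this setup the bagged variance should come out clearly smaller than the single-model variance, while both predictions stay centred near the true value f(5) = 10.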

Ensemble learning is a machine learning paradigm where multiple models, often called weak learners or base models, are trained to solve the same problem and combined to get better results.
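
To see why combining weak learners can help, consider an idealized case: $B$ classifiers that are each correct with probability $p$ and make independent errors (an assumption that bagged models, trained on overlapping data, only partly satisfy). The majority vote is correct whenever more than half of them are, which is a binomial tail probability. The function name below is hypothetical.

```python
from math import comb


def majority_vote_accuracy(n_models: int, p: float) -> float:
    """P(a strict majority of n_models independent classifiers is correct); use odd n_models."""
    need = n_models // 2 + 1  # smallest strict majority
    return sum(
        comb(n_models, k) * p**k * (1 - p) ** (n_models - k)
        for k in range(need, n_models + 1)
    )


if __name__ == "__main__":
    for n in (1, 5, 25, 101):
        print(n, round(majority_vote_accuracy(n, 0.6), 3))
```

With $p = 0.6$ the formula gives 0.6 for one model and 0.68256 for five, and the accuracy keeps rising with more models. With $p < 0.5$ the same formula shows that voting makes things worse, and correlated errors shrink the gain in either direction.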

The main goal of bagging is to decrease variance, not bias. Let's assume we have a sample dataset of 1,000 instances.

Sampling is done with replacement on the original dataset, and new datasets of the same size are formed.
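
For the 1,000-instance example, one bootstrap sample can be drawn with Python's standard library. Each sample has the same size as the original data, but because draws are made with replacement, some instances repeat and others are left out: on average a fraction $1 - (1 - 1/n)^n \approx 1 - 1/e \approx 0.632$ of the instances appear, and the remaining ones are called out-of-bag. The function name `bootstrap_indices` is hypothetical.

```python
import random


def bootstrap_indices(n, rng):
    """Indices of one bootstrap sample: n draws with replacement from range(n)."""
    return rng.choices(range(n), k=n)


if __name__ == "__main__":
    n = 1000  # the sample dataset size from the text
    rng = random.Random(0)
    idx = bootstrap_indices(n, rng)
    in_bag = set(idx)
    out_of_bag = [i for i in range(n) if i not in in_bag]
    print("sample size:", len(idx))                   # same size as the original: 1000
    print("distinct instances:", len(in_bag))         # about 632 on average
    print("out-of-bag instances:", len(out_of_bag))   # about 368 on average
    print("expected distinct fraction:", round(1 - (1 - 1 / n) ** n, 3))
```

The out-of-bag instances of each model act as a free validation set, which is how out-of-bag error estimates are computed for bagged ensembles.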

